TinyVerify - Translation Validation for ML Compilers

Translation Validation for the lightweight Tinygrad ML compiler

Project Overview

TinyVerify is a formal verification framework for validating compiler optimizations in Tinygrad, a minimalist machine learning framework. While traditional compilers like LLVM have established verification tools (Alive2), machine learning compilers face unique challenges in validating the complex transformations from high-level models to hardware-specific code. Our framework ensures these optimizations preserve program semantics by using SMT (Satisfiability Modulo Theories) solvers.

Technical Implementation

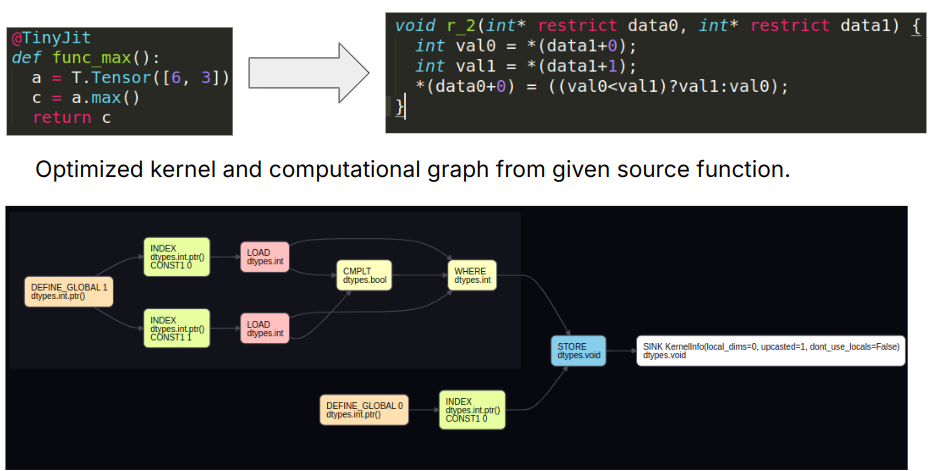

Kernel Validation

- Developed an end-to-end validation pipeline for GPU kernels generated by Tinygrad

- Validated transformations including operator fusion, constant folding, and memory layout optimizations

- Successfully verified 25 out of 49 fundamental operations (UOps) in Tinygrad’s IR

- Tested against real ML models including MNIST, validating hundreds of kernel optimizations

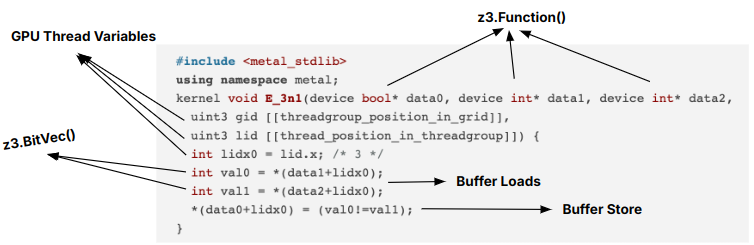

SMT Encoding Framework

We built a comprehensive encoding system using Z3 that handles:

- Primitive Types: Full support for various data types (bool, int16/64, uint32) using Z3 BitVectors

- Memory Operations: Modeled buffer operations (LOAD, STORE, INDEX) using uninterpreted functions

- Vector Operations: Support for fixed-size vectors leveraging Tinygrad’s lazy evaluation

- Control Flow: Implementation of WHERE (conditionals) and RANGE (loops) operations

- Floating Point: IEEE-754 compliant verification with configurable precision requirements

Undefined Behavior Tracking

Similar to Alive2 and LLVM, we implemented sophisticated UB tracking:

- Monitoring of overflow conditions and undefined memory access

- Propagation of “poison” values through operations

- Verification of optimization refinement - ensuring target code maintains or improves program determinism

Testing Framework

Litmus Tests

- Custom test suite with 12-15 Tinygrad functions

- Coverage of ~30 different IR rewrites

- Focus on tensor manipulations and matrix operations (GEMMs)

Pipeline Tests

- Automated extraction and verification of kernels from .pkl files

- Support for analyzing any Tinygrad model’s compilation process

- Integration with existing Tinygrad codebase through minimal modifications

Results and Current Work

Our framework successfully identified several precision-related issues in floating-point operations while validating Tinygrad’s optimization pipeline. Current development focuses on:

- Enhanced UB integration with Tinygrad’s IR

- Expanded floating-point support with IEEE compliance

- Extension to full compilation pipeline validation including scheduling

Visualization of a Tinygrad function’s kernel and its computational graph (IR)

Our Z3-based encoding architecture for kernel validation